ChatGPT and other artificial intelligence (AI) writing tools are becoming increasingly popular among medical writers exploring the power of AI, for many reasons. I find ChatGPT to be a fun, easy-to-use and helpful tool. For medical writers, it can help with writer’s block, creativity and idea generation.

But while it may seem like AI writing tools can do everything a writer can and more, and you may think they will one day take over your job entirely, don’t stress just yet. There are plenty of limitations when relying on AI-generated medical content. Here are a few of them.

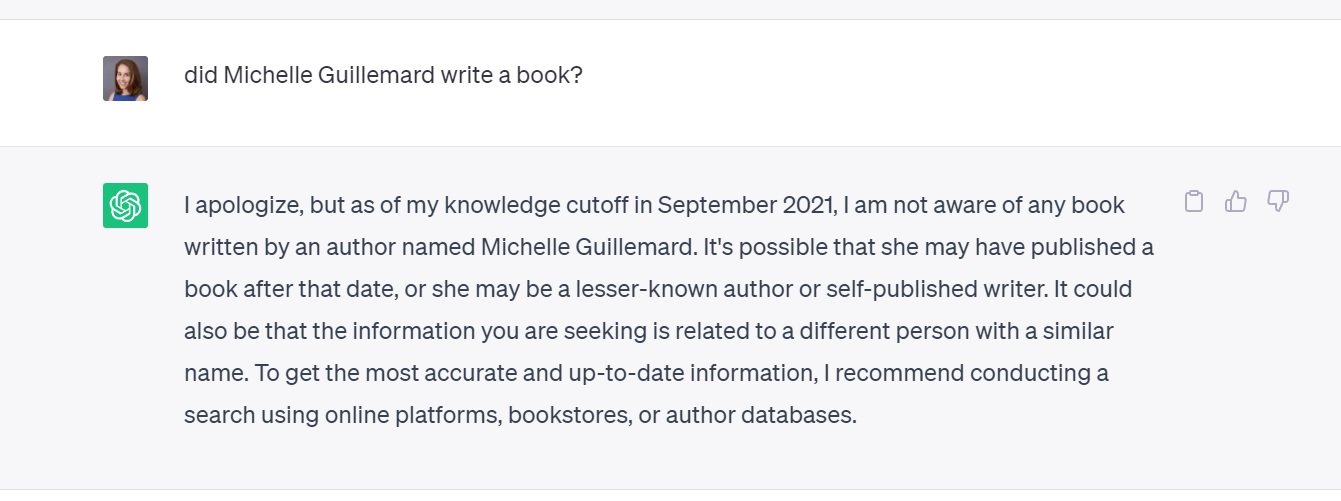

1. ChatGPT does not access the internet

Until I wrote this blog post, I assumed ChatGPT was like a rapid Google search, compiling answers to my questions at the speed of light. But the current free version of ChatGPT doesn’t search the web or pull in any information in real time. That’s why you can’t ask it about events happening right now. This brings us to the next point.

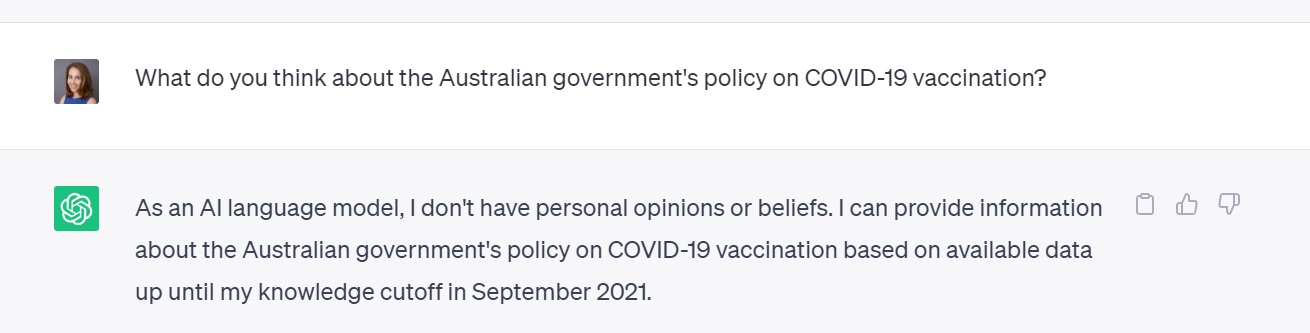

2. ChatGPT lacks real-time information

If you’re experimenting with AI-driven medical writing, you need to know that the knowledge of the current free version of ChatGPT only goes up to September 2021. That means it can only draw on information and data up to that date. Expect this to change soon, but for the moment, it is missing nearly two years of data. So you can’t rely on it to write about new medical research or emerging medical trends. By its own account, everything it generates is based on pre-September 2021 information, so it may already be outdated.

3. ChatGPT can’t tell real-life stories

ChatGPT can’t write personal stories built on facts that aren’t in its training data. That means there will always be a place for storytelling, expert interviews and anecdotes in health writing. It can’t get inside people’s heads! It can only rely on the data it was trained on, up to September 2021.

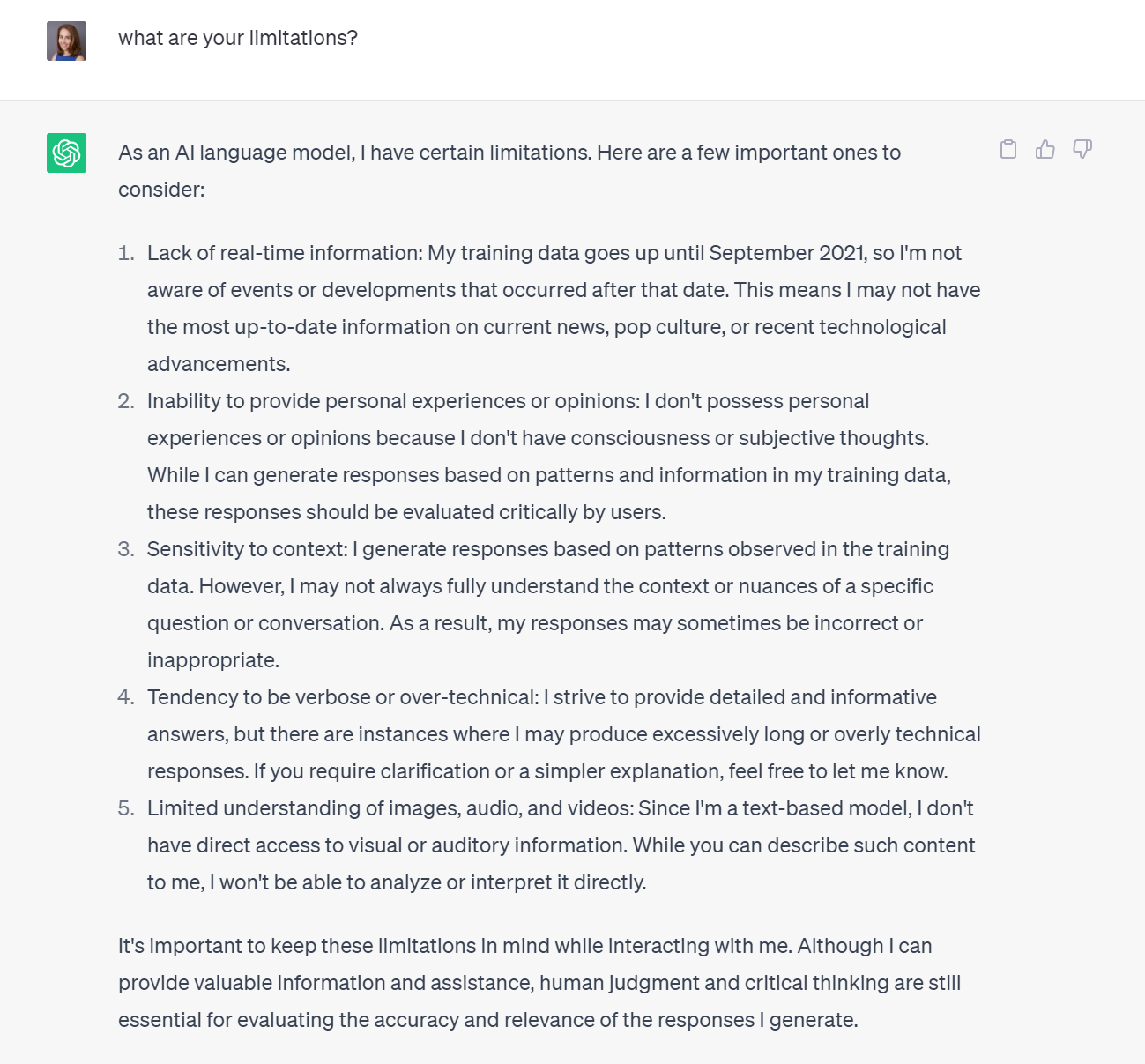

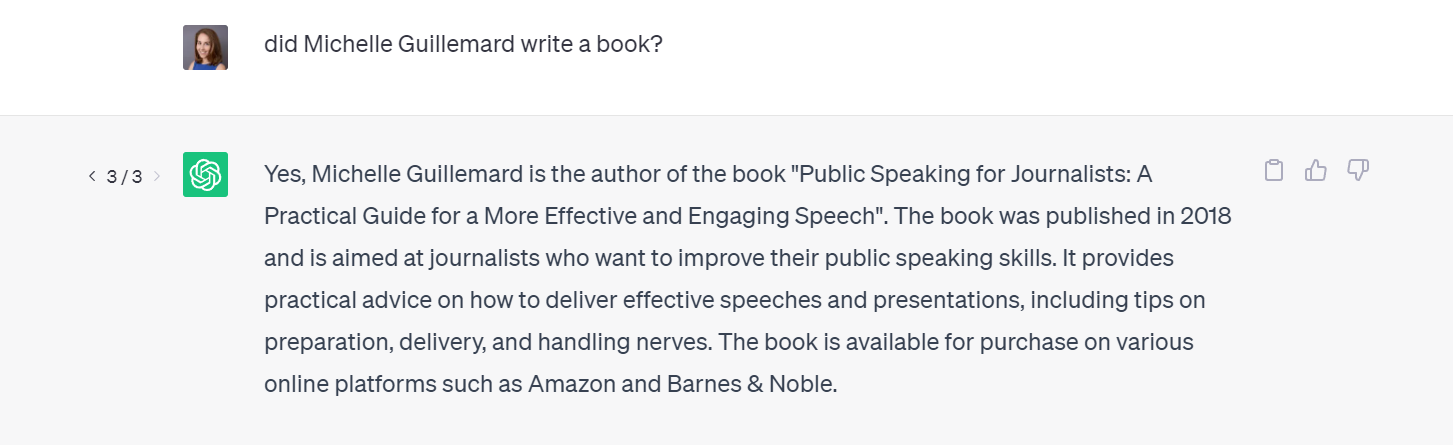

4. ChatGPT produces inaccurate information

Of course, the main limitation of ChatGPT is that it is not human and doesn’t have a brain. That means it can, and will, produce answers that are wrong or don’t make sense.

Here’s an example. A few weeks ago, ChatGPT said I had written a book about health writing (I never have, but it is an exciting idea!). Here’s the response it gave when I asked it if I had ever written a book.

A few weeks later, while writing this post, I asked the same question again and got a completely different answer. These inconsistent answers show that fact-checking will become even more essential in a health writer’s toolkit as we rely on these AI models to generate content. We can’t trust everything they write, and their content will always need a quality check.

5. ChatGPT doesn’t have opinions, and it can’t make decisions

When you ask ChatGPT for its opinion on an issue, it won’t give one. The tool can provide general information, but it can’t make decisions or suggestions for you.

6. ChatGPT doesn’t vary its tone or expression

ChatGPT can’t adjust its emotional register to suit different topics. The language is friendly but can be fairly formal. There is no real personality, as the writing follows predetermined language rules without room for creativity.

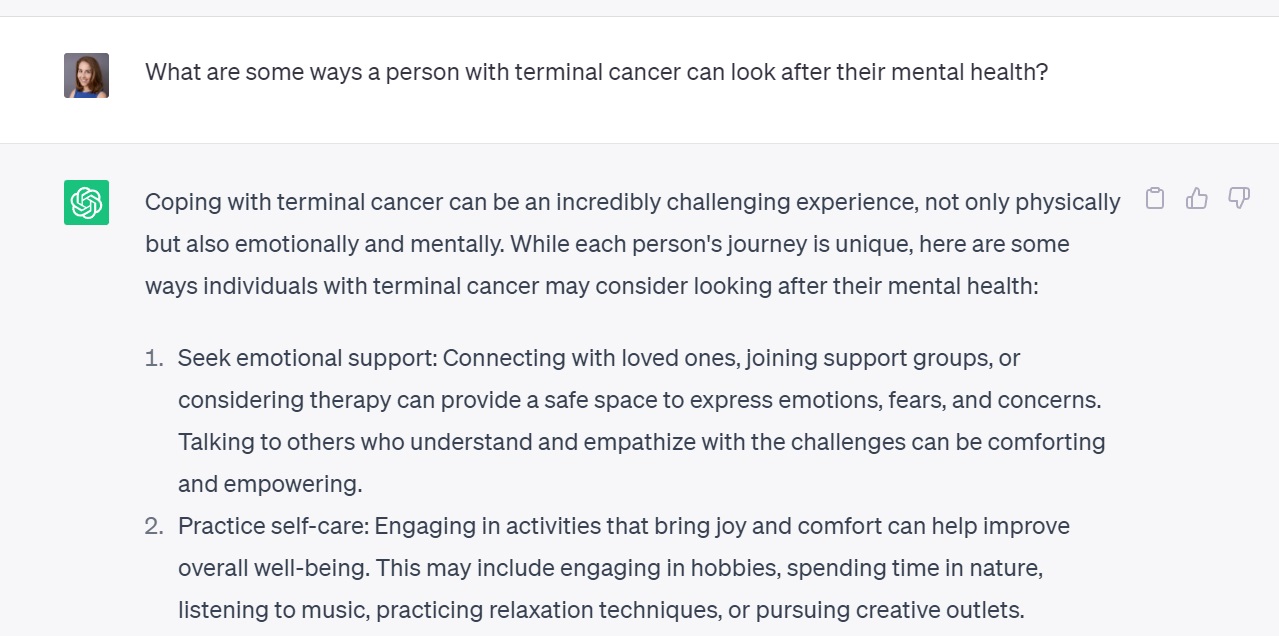

It’s important to understand that different audiences require different tones in medical writing. The tone of voice in medical writing is an essential engagement tool. You wouldn’t write to a person with cancer using a cold, clinical tone, as that would upset them. We need to use empathy and understanding in our writing for maximum engagement.

Similarly, targeted content is more engaging than generic content, but ChatGPT can’t target content to different types of readers effectively.

ChatGPT will provide answers with a certain level of empathy, such as in the example below. However, the tool tends to use plain, straightforward language. It doesn’t tailor its writing to different audiences or use highly expressive language.

Other ChatGPT limitations

There are many other limitations of this new technology, including:

- It only accepts questions in text format

- It can’t respond to long text questions or descriptions, only short questions

- It can’t answer more than one question at the same time

- It can’t give in-depth answers – it usually only gives short generic summaries

- It can’t format content or add headlines or bullet lists

- It can’t add links to relevant content

So, don’t be frightened of this new technology just yet. There will be more to come in this space, but for now, why not enjoy using it in your writing process, as you would other productivity tools such as Grammarly?

Knowing its limitations and potential flaws will help you continue to produce high-quality health writing in your work and for your clients.